2020. 3. 3. 04:42 · Uncategorized

- Found A Way To Sandbox Unity Osx Standalone For Mac

- Found A Way To Sandbox Unity Osx Stand Alones For Mac Free

- Found A Way To Sandbox Unity Osx Stand Alones For Mac Pro

That reminds me of something that happened when I was working at Adobe around 2002. A coworker saw me using PaintShop Pro to sort through a few photos, and he said, 'But. You work for Adobe!' I explained, 'I'd love to use Photoshop, but it fails to do the very first thing I need when I look at a batch of photos I shot in burst mode: flip back and forth between them to see which is best.' PSP let me flip between two photos instantly so I could see which I liked; Photoshop couldn't do that. Every time I used Ctrl+Tab to switch photos, it first drew a placeholder checkerboard across the entire screen, and then it slowly pulled in the new photo block by block.

Of course, there was probably some good technical reason for this: maybe some legacy Photoshop code designed for even older computers with little memory. But that didn't help me.

The funny thing about your (valid!) complaint is that browsers are perfectly capable of doing the right thing for you when you replace one image with another: an instant visual swap. But instead, people add scrolling and fading and other effects just to show off how 'designy' their site is.

Like iOS 12, very promising overall from the sound of it, and a genuine step forward from previous years in the fundamentals of speed and stability, just as they promised to work towards. One continuing (though not at all new to this release) disappointment I have with Apple, though:

'The end of OpenGL and OpenCL on the Mac'

A solid summary of the situation, but to me where Apple really deserves blame is in not having MoltenGL and MoltenVK (and perhaps some theoretical MoltenCL too) as 1st party projects.

I completely understand them wanting their own directly writable and controlled low level graphics language and having that be the interface layer. They are pretty vertically integrated and are now even doing their own GPUs, but even before that on the Mac they have long built the OS very strongly around GPU capabilities (for better and for worse, basic VM experience is very mediocre if you don't have a hardware GPU to pass through). If anything, I'm mildly surprised in retrospect that Metal wasn't done earlier: OpenGL wasn't working for them, and it's that big a deal.

Before there was The Division there was Snowdrop, a proprietary game engine designed over five years for Ubisoft's PC, Xbox One and PlayStation 4 games — and eventually, The Division.

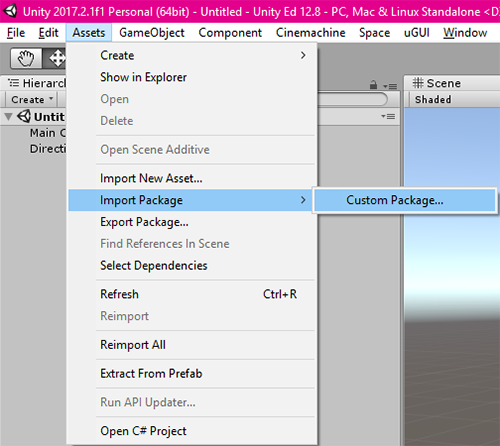

- Create a .plist file with the App-Sandbox entitlement. It seems you can't just.

- Universe Sandbox ² is now available for Windows, macOS, Linux/SteamOS. Linux distributions supported by the Unity Engine (currently Ubuntu 12.04+). Greater complexity may be developed in the future, but it's a long way off. Instead of a Steam code, you will receive a standalone version of Universe Sandbox ².
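The first list item above mentions a .plist file carrying the App Sandbox entitlement. As a minimal sketch (not the original author's steps, which are truncated in the source), such a file can be generated with Python's standard `plistlib`. The key `com.apple.security.app-sandbox` is Apple's App Sandbox entitlement key; the variable names are only illustrative.

```python
import plistlib

# An entitlements file is an ordinary property list. This dictionary holds
# the single key that turns the App Sandbox on for a signed app.
entitlements = {"com.apple.security.app-sandbox": True}

# plistlib.dumps() serialises to the XML plist format by default.
xml_bytes = plistlib.dumps(entitlements)

print(xml_bytes.decode("utf-8"))
```

A file with this content is typically handed to the code-signing step (for example through `codesign --entitlements`); how that fits a particular Unity standalone build is an assumption, since the source does not say.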

Microsoft invested in their own graphics layer for Windows ages earlier. But controlling their own low level layer doesn't mean that higher level non-proprietary interfaces shouldn't still be supported on top. Part of the point should be to enable abstraction there. Apple is a big enough player and platform that this shouldn't be a zero-sum game, and their perfectly understandable strategic concerns addressed by Metal don't preclude OpenGL continuing to be officially supported as a layer on top of Metal. Apple could have even done it as another open source project of which they were the primary sponsor even if they wanted to manage it that way. This may be just another symptom of their unusual startup-type culture which lets them hyperfocus on specific products but reduces their corporate ability to multitask, but it's still a shame. It could have been just about supporting newer and better stuff going forward, not taking something away.

Even if MoltenVK/MoltenGL ultimately fill some of that void, I don't think that should have been relegated to 3rd parties only.

I don't blame them for this. They want to get out of OpenGL because of how much of a maintenance nightmare it has become due to non-technical concerns.

To ship top end OpenGL (and DirectX) drivers these days, it's more or less required that you write tons of code that recognizes the application that's calling your driver and fixes bugs in its code. Nvidia started doing this, and now all end users see is that programs work on Nvidia and not AMD (or Apple, as they write their own drivers), and blame everyone other than Nvidia. The switch to these new Mantle-derived APIs is as much, if not more, about resetting those expectations of responsibility as about the technical benefits that these APIs provide (which AZDO, by the 80/20 rule, got most of the way to anyway). Supporting a MoltenGL as a first class item defeats the whole purpose of moving away from OpenGL to begin with, from their perspective.

OpenCL never got the critical mass in their eyes, and someone else is already taking on MoltenVK.

As someone who works on a game that is pretty popular, I'm not sure I buy this. I'm certainly not aware of nVidia doing anything special for our game, and honestly, they haven't really been super easy to get help from when we have attempted to ask them questions about nVidia-specific problems that we run into.

Since we are not a big publisher, it's super difficult to maintain a relationship. We will get assigned someone to talk to, and next time we actually have a problem that person will have quit / moved to a different department / stopped caring about us. Our game is in the top 10 on Steam right now, and is typically in the top 20 even during low periods, so I would have thought we would be somewhat higher up the priority list for specific attention if that was going to happen. So all of that is to say, we pretty much have to deal with the problems ourselves, not rely on nVidia to fix them for us. That being said, people complain about problems on AMD in our game all the time.

So why is that? Forget game-specific optimisations; I think it's just that nVidia has plain better drivers in general. When we are developing new engine features, the difference is pretty clear. If you naively implement a shader, it often runs faster on nVidia initially. It's usually possible to persuade the AMD drivers to run things just as fast, but it's totally non-obvious how.

My gaming PC (2x AMD R9 290X, 8 GB video memory) is absolutely awful with a lot of (esp. Ubisoft) games like Assassin's Creed or Watch Dogs.

I just assumed the games weren't optimized for AMD hardware. That is based partly off the Nvidia logos that are shown during startup and in the graphical settings menu.

There are also graphical issues at high resolutions. I've been planning on buying an Nvidia GPU so I can actually play games at a decent frame rate. Are you telling me part (or all?) of the problem is bad drivers? I got the drivers from Windows Update rather than from AMD so they would just update themselves and be the correct ones.

In my mind, the problem is bad code from developers like Ubisoft, and the willingness of Nvidia to expend tons of engineering effort to hack around it on a case-by-case basis. It's not really fair to call standards-compliant drivers 'bad' in my mind. AMD pushed Vulkan (and Mantle previously) hard because it lets them break the cycle due to the layering concept in the driver stack.

Intrinsic in how those drivers work is allowing end users to stick API verification layers in at load time. This will allow AMD to go to the press and say 'see, it's not us, it's the application developer that wrote terrible code' without leaking any internal IP of those other shops.

That is one of the reasons why I think Metal is the right way to go. I can't understand how we will be able to sustain the current pace of GPU development when more than half of the cost is in software driver development and that share is constantly moving up.

That's another reason why the desktop GPU market is so hard to break into, as we have seen time and time again, from 3Dfx with poor DirectX drivers to S3, 3D Labs, Matrox, PowerVR etc. But I tried making this point with an Intel GPU engineer and he said my point is moot. Maybe he knows something I don't.

Sounds like Nvidia understood what Microsoft understood.

An operating system is really only useful in that it allows you to run the software that you use. Because of this, Microsoft went to extreme efforts to make sure that when you upgraded your OS, your programs ran. Nvidia understands that gamers buy graphics cards to run games on them. They just want their game to run and look good on the graphics card. They don't really care about how bad the game developers are or what efforts Nvidia had to take to get the game to run.

At the end of the day, games running well means happy customers.

“but to me where Apple really deserves blame there is not having MoltenGL and MoltenVK (and perhaps some theoretical MoltenCL too) as 1st party projects”

Yeah, they should have learned from OS/2 and that other graphics subsystem it supported for compatibility (sorry, forgot what it was called). Foo on top of bar will always have incompatibilities and performance problems compared to foo on bare metal, but third parties will develop for it anyway if it gives them an easy path to a larger market, and the risk is that bar will become an implementation detail rather than a competitive advantage.

Electron apps are a good recent example. I bet all major OS manufacturers would like to get rid of them, if it meant those applications ran on their OS and not on others.

'ending support for 32 bit apps', 'deprecating OpenGL'

My opinion on APIs has gone from new and bleeding edge to 'support it till the sun burns out'.

Computers are meant to work. On Linux or Mac, no executable over 5 years old seems to work. But if you download a .exe from the early 90's on a PC, it often just works. In 100 years, the Windows API and .exe are going to be the lingua franca of programs. There are many indications this is happening: Valve baking WINE into Steam, the fragmented package managers on Linux, and no common runtime environment taking off.

I love my MacBook Pro. Really, I do.

And chances are, I'll probably use some iteration of a PC (in this case, I use an eGPU so I could replace my desktop entirely) until I, well, stop using hardware. I also use macOS and Windows on it. That being said, I will eventually die. Now, the question becomes what are the people that follow after me going to be using. I have kids in school: both use Chromebooks for school work, in one case a PS4 for gaming, and hand-me-down iPhones/iPhone SEs for social, gaming, and the like. Could they eventually need a PC? Possibly, depending on their career path/interests and the level of support for iPads, Chromebooks, etc.

But they don't need it now. And I know people who are my age and older who limit themselves to iPhones/iPads for technology beyond the TV.

None of these things are running Windows. I can only speak for the US market I guess, but they haven't found a real need for a PC/Mac outside of what a Chromebook can do, so at the end of the day, I don't feel it bodes well for PC/Mac long term. I'm a developer at work for internal applications (and some external). All of my non-server running things are web applications.

They could be accessed by Chromebooks instead of the HP Windows 7 laptops we use now. I doubt we'd switch off PCs due to familiarity at the very least, but we don't need them.

But Core Storage, the technology responsible for keeping the most frequently accessed files on the SSD, can only see data on your drive at the block level, not the file level. It can’t tell the difference between an app that needs to be launched quickly or a document that doesn’t need a lot of extra speed; all it can see is how frequently blocks are accessed. APFS changes that. Fusion Drives can now move files to the SSD based not just on how frequently they’re accessed, but also based on how much the type of file will benefit from an SSD.
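To make the contrast in the paragraph above concrete, here is a toy sketch of the two placement policies: one ranks purely by access frequency, the other also weighs how much a kind of file is assumed to gain from the SSD. This is not Apple's algorithm. The item names, the `SSD_BENEFIT` weights and the greedy fill are all hypothetical, and the real Core Storage works on blocks rather than whole files.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Item:
    name: str
    kind: str       # hypothetical label: "app", "document" or "metadata"
    accesses: int   # reads observed in some time window
    size: int       # size in arbitrary units

# Hypothetical weights: how much each kind is assumed to gain from SSD speed.
SSD_BENEFIT = {"metadata": 4.0, "app": 2.0, "document": 1.0}

def place_on_ssd(items, capacity, score):
    """Greedily fill the SSD with the highest-scoring items that still fit."""
    chosen, used = [], 0
    for item in sorted(items, key=score, reverse=True):
        if used + item.size <= capacity:
            chosen.append(item.name)
            used += item.size
    return chosen

def by_frequency(item):
    # Frequency-only policy: all it sees is how often something is read.
    return item.accesses

def by_frequency_and_kind(item):
    # Type-aware policy: frequency scaled by the assumed benefit of the kind.
    return item.accesses * SSD_BENEFIT.get(item.kind, 1.0)

items = [
    Item("report.pdf", "document", accesses=10, size=2),
    Item("Editor.app", "app", accesses=6, size=2),
    Item("catalog", "metadata", accesses=4, size=1),
    Item("movie.mov", "document", accesses=1, size=5),
]

print(place_on_ssd(items, 3, by_frequency))           # ['report.pdf', 'catalog']
print(place_on_ssd(items, 3, by_frequency_and_kind))  # ['catalog', 'Editor.app']
```

With the same SSD budget, the frequency-only policy keeps the hot document, while the type-aware one prefers the metadata and the application even though they are read less often.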

APFS will store all file metadata on your SSD, too, speeding up Spotlight searches and metadata lookups in the Get Info window.

Can someone more knowledgeable than me explain how in the world this is an improvement? The old Core Storage model makes perfect sense to me: it strictly operates beneath the level of a file system, combining multiple physical volumes into a logical volume family which contains a logical volume. It moves blocks between SSD and HDD using frequency of access. Now, why should we suddenly need to look at the type of file to determine which block device to store the data on? Even if it's an application, if it's not frequently used, why should we keep it on SSD? And filesystem metadata should be even more obvious.

Since basically all file operations need the directory entries and the inode information, the old Core Storage-based system will automatically move them to the SSD. Why do we need to explicitly tell the filesystem to do this? Overall this feels like a step backward to me. I like systems that are dynamic and self-adjusting, not those with hard-coded rules and heuristics. Also, I'm a bit disappointed that the author, while producing a fine review to read, didn't quite do the deep dive I had expected for a system that's arguably the most important in an OS, a system in charge of storing user data.

'Now, why should we suddenly need to look at the type of file to determine which block device to store the data?'

We don't 'need' to, it's an extra optimization.

'Even if it's an application, but if it's not frequently used, why should we keep it on SSD?'

Because if we have the space to spare on the SSD, we'd appreciate the faster launch when we do try to use that app. And for other types of files it can now decide to move them or not move them based on the benefit from the faster load, not just the frequency of access (and thus optimize the SSD use).

It's faster, but still incredibly slow.

For example, when you launch it, it's just a black rectangle for 3-5 seconds (2018 MacBook Pro). And the UX is all over the place, with 3-4 different ways to go back/close whatever you're looking at.

The way you navigate reviews with side-swipes might make sense on a phone but makes no sense whatsoever on the Mac. You can tell it's made to spec and with zero developer love softening the rough corners, because no developer who cares would make a full-screen screenshot view mode you cannot exit with Esc.

The pop-up permission boxes don’t make a great first impression, since I saw at least 5 of them within 2 minutes and they tended to have really obscure descriptions like access to “System Events”. While probably technically much more difficult, maybe they could have the first Mojave launch of every app occur in a separate and invisible “everything allowed” area, where the system just pretends to allow things and tracks everything that the app did on launch that would require permission.

And then, it can display one box with a summary of features that seem to be required for that app, relaunching the app in the real system sandbox if approved. I suppose this would be less of an issue if the first launch didn't try to open every single app/service that was running before the reboot all at the same time. This is frustrating behaviour in general when you have a bunch of apps open at work.


Slack will repeatedly attempt to steal focus on launch as it displays a splash screen and then the real app (a chat client requires a splash screen now!?), most apps using Sparkle for auto-updating will throw more prompts at you as new versions roll in, and a different updater from Microsoft or Adobe will boot up to check on those installs. Meanwhile, all of them are trying to resize to full screen or appearing in some next-best position, because they're all competing for the foreground. You can't do shit until you're sure the computer's settled down. And that's before you get the extra permission prompts.


Yeah, maybe it's just a bow to practicality, but if anything I'm mildly surprised regardless, because they've gotten quite serious about their own HSM implementation, which is slowly starting to spread to more and more new Macs and is effectively universal under iOS. I sort of expected, given initial thrusts like Apple Pay, that they'd push hardware token usage way harder, with either direct support for Macs with T-series chips already or else some tie-in to iDevices. Ideally NitroStick/Yubikey/etc would come along for the ride and Apple would work towards a framework that could be used with other HSMs beyond their own, but at this point I'd take any major platform trying to move crypto auth forward over passwords/sms/anything else at all.

But it can be a non-trivial amount of space, and (especially on SSD) that can matter. The first review I see for AppCleaner on the MAS right now is from a very happy user who just reclaimed 19 GB on their MacBook Air after a first run on a 4 year old machine. For that user, it's hard to disagree that that matters. Also: 7 GB was from AirMail not deleting its 'junk'.

If that junk was in fact its internal cache of emails then that user has thought that those emails had gone with the app, while in fact they were still carrying them around. Now that could be a very big deal. (Unfair to assume, but a reasonable scenario). The same behavior may be present on Windows for all I know. I've used Win7 a handful of times and Win 10 once or twice, so I don't have a good handle on how the Registry deals with uninstalls these days.

My complaints re disk space are mostly still around because SSD prices are still not cheap compared to spinning disks. For example, I went from 80 GB (2005 Dell Latitude) to 120 GB (2007 MBP) to 256 GB (2013 MBP). Desktop drives at the time were probably triple to quadruple the storage at half the price, so it's still an issue. To migrate everything to an SSD in my next machine will take somewhere between 1-2 TB, and last I checked, the 1 TB option on a MacBook Pro was $600.

Anyone else find dark mode stark? It was a bit shocking to see it and very jarring after almost 2 decades of looking at various shades of silver/gray menubar and dock.

I now kind of miss the ability to have only dock, menubar, notifications side bar, spotlight and HUD for brightness, volume be black. This mode offered a great balance between classic window chrome and the too much brightness you now get in 'light' appearance mode. I find I can't work in dark mode during daytime. Way too many reflections and reading mail and PDFs in preview are too jarring.

Black beside white makes each stand out so much. I think macOS is now the least polished it has ever been.

There's no reason to stay on 10.12 to avoid APFS. I tried a fresh install of 10.13 with APFS on a hard drive. I wasn't happy at all with the performance, especially at boot (for some reason). So I started over and went with HFS+. I have no idea if APFS on Mojave behaves better, but if it doesn't force the file system upgrade (didn't check this yet) I see no reason to avoid Mojave.


APFS would make a lot more sense on an SSD but even with 0 improvement (excluding visible performance drops here) at storage level the other features alone should make the upgrade worth it.